Reflections on the "Youtu-Agent" architecture and the paradigm shift towards automated agent generation and hybrid policy optimization.

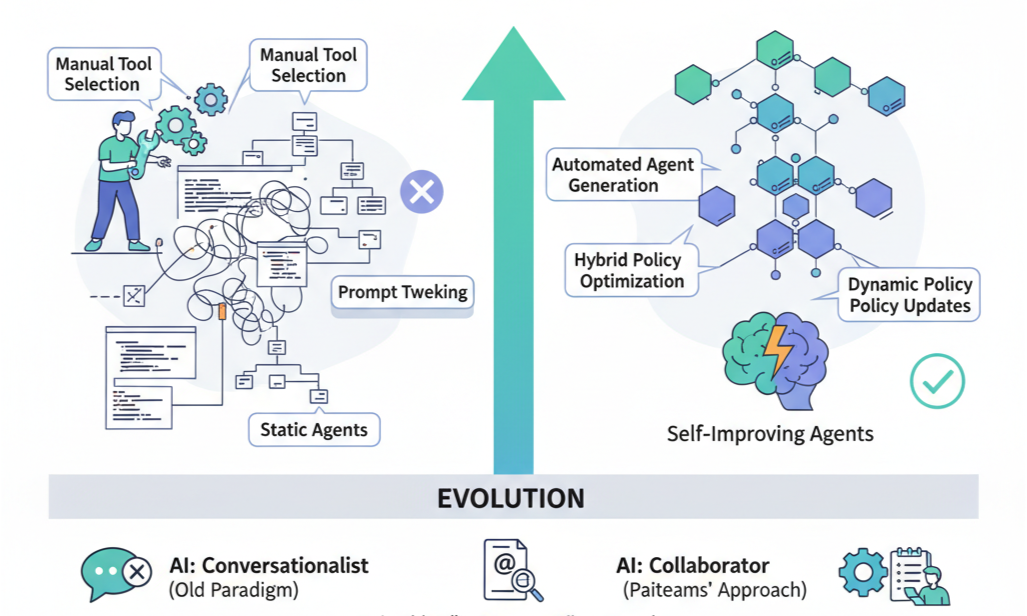

The Shift: Beyond the "Chat" Box

At Paiteams, we operate on a core belief: AI should not just be a conversationalist; it must be a collaborator. This is why we built a workspace where agents live within your notes, triggered by a simple @, ready to execute complex workflows alongside your team.

However, the entire industry faces a bottleneck. Building these agents is currently a "handicraft" process. Developers manually select tools, hard-code Python interfaces, and endlessly tweak prompts. Once deployed, these agents remain static—they don't get smarter unless you manually update them.

Recently, the Youtu-Agent research paper caught our attention. It proposes a framework that aligns perfectly with our engineering philosophy: moving from manual configuration to Automated Generation and from static deployment to Continuous Evolution.

The Breakthrough: "Generate & Evolve"

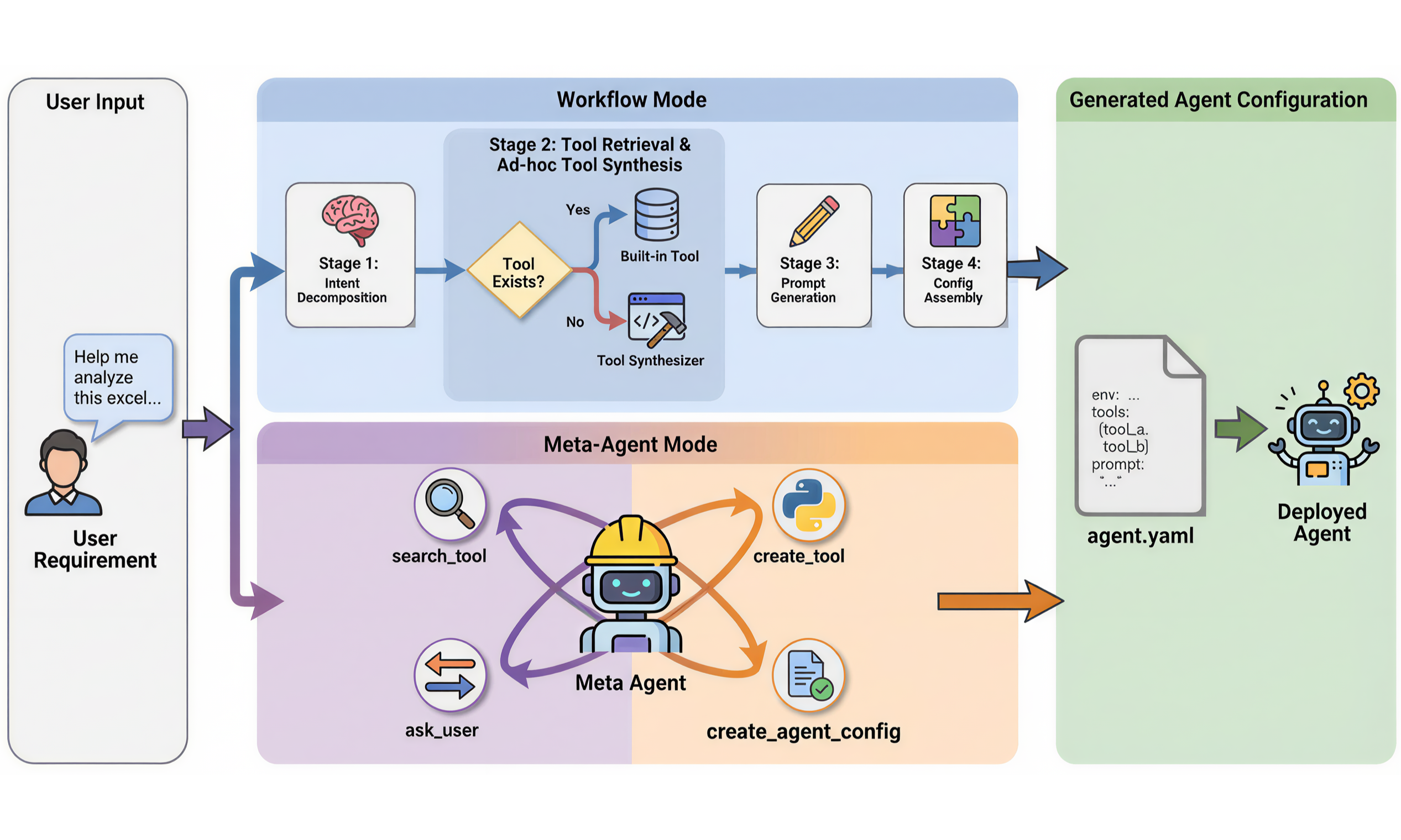

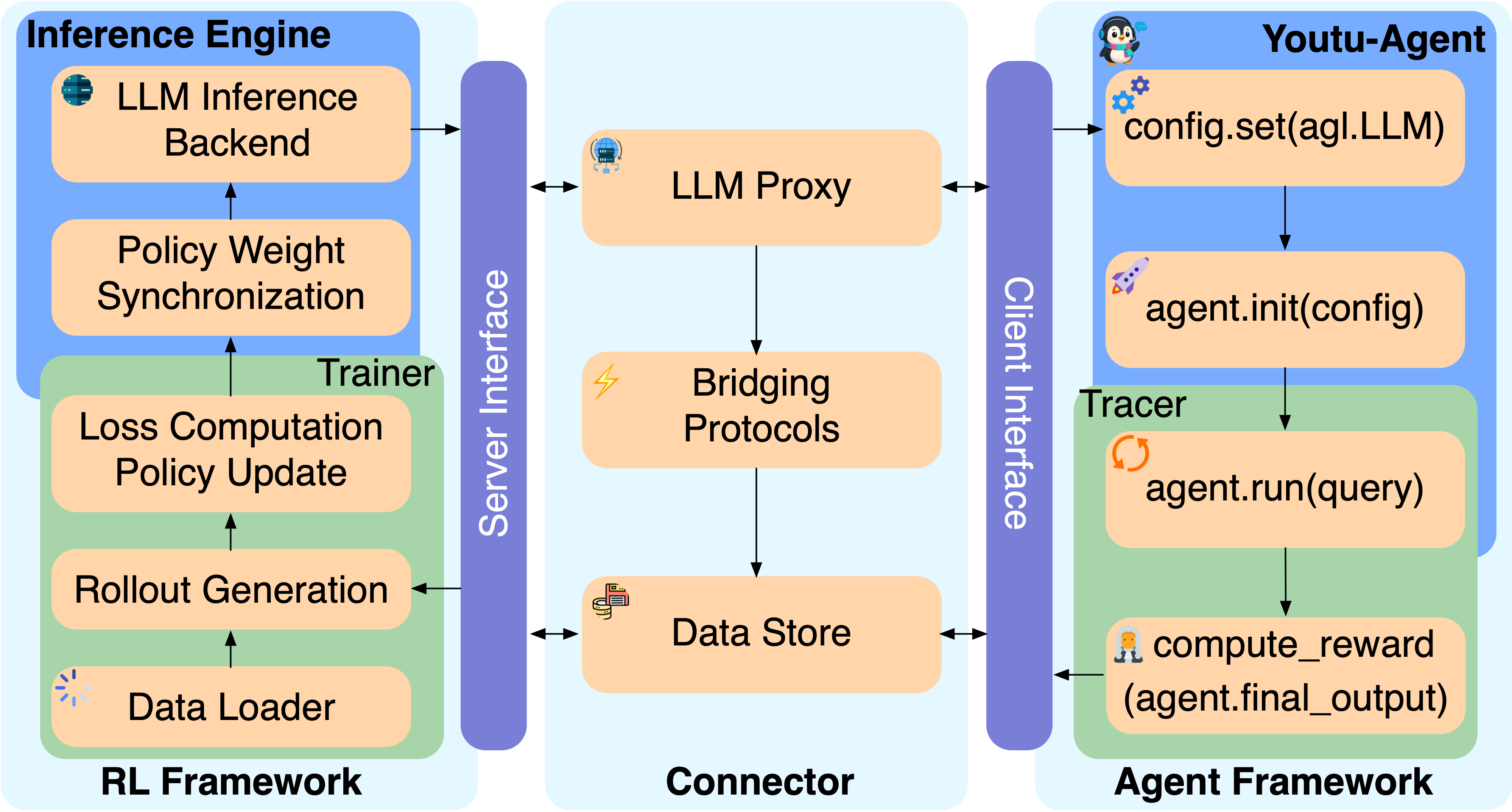

The research addresses the scalability of agent productivity by decoupling the system into three standardized layers (Environment, Tools, Agent) described via YAML. But the real innovation lies in its dual-drive mechanism:

1. Automated Generation (The "Factory")

Instead of humans writing code for every new tool, an LLM acts as a "Meta-Agent." It clarifies requirements, retrieves or writes the necessary Python code/YAML configurations, and assembles a functional agent in minutes.

- Why this matters: It reduces the marginal cost of creating a specialized agent (e.g., a specific @MarketResearcher for a niche industry) to near zero.
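
To make the "Factory" idea concrete, here is a minimal sketch of how a meta-agent might assemble an agent from the three layers (Environment, Tools, Agent). It is our own illustration, not the paper's schema: every class, field, and tool name below is a hypothetical placeholder, and the LLM call that would clarify requirements and write missing tools is replaced by a simple catalogue lookup.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class EnvironmentSpec:
    name: str  # hypothetical: where the agent runs, e.g. a note workspace


@dataclass
class ToolSpec:
    name: str
    description: str


@dataclass
class AgentSpec:
    name: str
    instructions: str
    environment: EnvironmentSpec
    tools: List[ToolSpec] = field(default_factory=list)


# Hypothetical catalogue of tools that already exist and can be reused.
TOOL_CATALOGUE = {
    "web_search": ToolSpec("web_search", "Search the web for recent sources"),
    "summarize": ToolSpec("summarize", "Condense long text into key points"),
}


def assemble_agent(
    name: str, requirement: str, wanted_tools: List[str]
) -> Tuple[AgentSpec, List[str]]:
    """Stand-in for the meta-agent: reuse known tools, report missing ones.

    In a real system the missing tools would be handed to an LLM to write;
    here they are only returned so the gap is visible.
    """
    found = [TOOL_CATALOGUE[t] for t in wanted_tools if t in TOOL_CATALOGUE]
    missing = [t for t in wanted_tools if t not in TOOL_CATALOGUE]
    spec = AgentSpec(
        name=name,
        instructions=f"You are {name}. Task: {requirement}",
        environment=EnvironmentSpec("note_workspace"),
        tools=found,
    )
    return spec, missing


spec, missing = assemble_agent(
    "MarketResearcher",
    "Track pricing trends in a niche industry",
    ["web_search", "summarize", "patent_lookup"],
)
print([t.name for t in spec.tools], "still to generate:", missing)
```

The point of the sketch is the separation of concerns: because each layer is plain data, a new specialized agent is mostly a matter of filling in a spec rather than writing new glue code.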

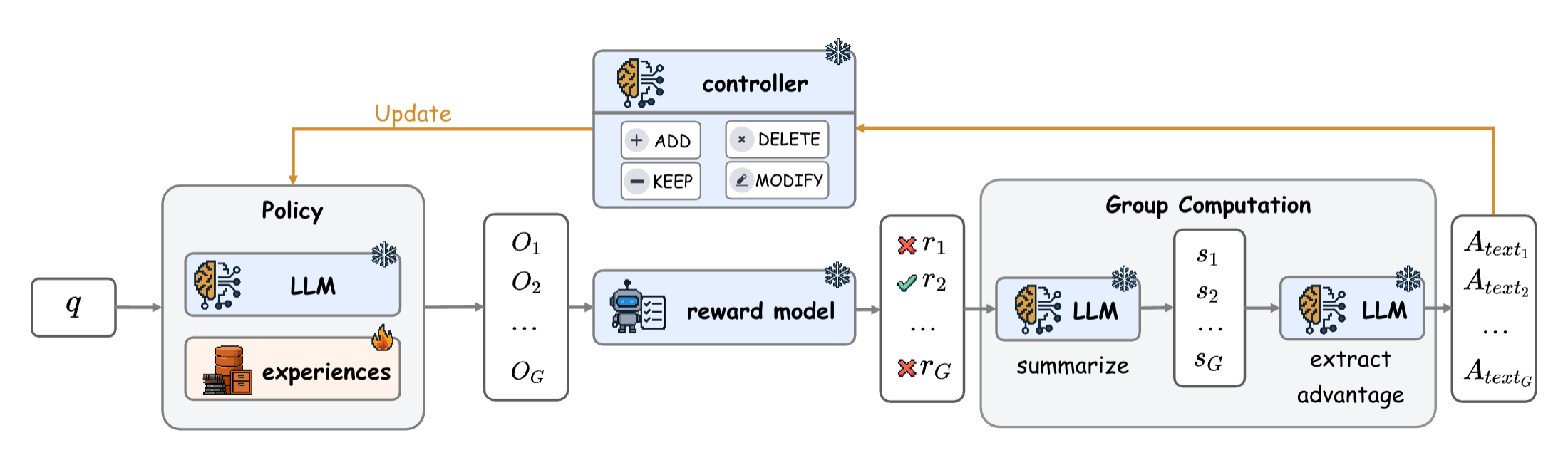

2. Hybrid Policy Optimization (The "School")

Once an agent is live, how does it improve? The paper introduces a hybrid approach:

- Agent Practice (Training-free): Using techniques like "Training-free GRPO," the agent accumulates experience from relative comparisons of its outputs, creating "text-based LoRA" (memories) that improve performance without expensive gradient updates.

- Agent RL (Reinforcement Learning): For larger-scale improvement, the system supports distributed RL, which lets agents improve the underlying policy itself through training rather than only through accumulated text experience.
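
The "Agent Practice" idea can be sketched in a few lines. The version below is a simplified illustration under our own assumptions, not the paper's implementation: it scores a group of outputs for the same task, normalizes the scores within the group (the group-relative step that GRPO is named for), and turns the contrast between the best and worst output into a text lesson that is stored in memory instead of being used for a gradient update. The function names and the `describe_difference` callback, which would be an LLM call in practice, are hypothetical.

```python
from statistics import mean, pstdev
from typing import Callable, List


def group_relative_advantages(scores: List[float]) -> List[float]:
    """Normalize each score against the group's mean and spread."""
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        # All outputs scored the same, so the group carries no signal.
        return [0.0 for _ in scores]
    return [(s - mu) / sigma for s in scores]


def practice_step(
    memory: List[str],
    rollouts: List[str],
    scores: List[float],
    describe_difference: Callable[[str, str], str],
) -> List[str]:
    """Return a new memory list, extended with at most one text lesson."""
    advantages = group_relative_advantages(scores)
    if all(a == 0 for a in advantages):
        return list(memory)
    best = rollouts[advantages.index(max(advantages))]
    worst = rollouts[advantages.index(min(advantages))]
    lesson = describe_difference(best, worst)
    if lesson in memory:
        return list(memory)
    return list(memory) + [lesson]


def build_prompt(task: str, memory: List[str]) -> str:
    """Prepend accumulated lessons to the task; model weights are untouched."""
    lessons = "\n".join(f"- {m}" for m in memory)
    return f"Lessons from earlier attempts:\n{lessons}\n\nTask: {task}"


# Hypothetical stand-in for an LLM that explains why one output beat another.
def toy_describe(best: str, worst: str) -> str:
    return f"Prefer answers like '{best}' over answers like '{worst}'."


memory = practice_step(
    [],
    ["cites two sources", "no sources", "cites one source"],
    [1.0, 0.0, 0.5],
    toy_describe,
)
print(build_prompt("Summarize the market report", memory))
```

Because the lessons live in the prompt, they can be inspected, edited, or discarded, which is part of what makes the training-free route cheap compared with full RL.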

Paiteams' Perspective: The Workspace as the Training Ground

Why are we discussing this research? Because Paiteams is the ideal environment for this evolution to happen.

In the Paiteams architecture, the "Note" is not just a document; it is the State and Context for the agent.

- Contextual Generation: When a user types @DeepSearch in a specific project note, the context (the text in the note) can serve as the prompt for the "Meta-Agent" to dynamically generate or tune the tools required for that specific task.

- Human-in-the-Loop Evolution: The "Agent Practice" mentioned in the research requires feedback. In Paiteams, when an agent generates a draft and you edit it, your edit is the feedback signal.

- We envision a future where our agents utilize this "Practice" module. If you correct the @Copywriter agent's tone in your note, the agent captures this "experience difference" and updates its local context. The next time you call it, it has already learned.
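
As a rough illustration of how an edit could become a feedback signal, the sketch below compares an agent's draft with the user's edited version and records the word-level changes as notes in the agent's local context. It uses Python's standard `difflib` module; the function names, the note format, and the `agent_context` dictionary are hypothetical and do not describe a shipped Paiteams feature.

```python
import difflib
from typing import Dict, List


def edit_feedback(draft: str, edited: str) -> List[Dict[str, str]]:
    """List the word-level changes a user made to an agent's draft."""
    before_words = draft.split()
    after_words = edited.split()
    matcher = difflib.SequenceMatcher(a=before_words, b=after_words)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # unchanged text carries no feedback
        changes.append(
            {
                "op": tag,  # "replace", "delete", or "insert"
                "before": " ".join(before_words[i1:i2]),
                "after": " ".join(after_words[j1:j2]),
            }
        )
    return changes


def remember(
    agent_context: Dict[str, List[str]], agent: str, changes: List[Dict[str, str]]
) -> None:
    """Append each change to the named agent's local context as a text note."""
    notes = agent_context.setdefault(agent, [])
    for c in changes:
        notes.append(f"user {c['op']}: '{c['before']}' -> '{c['after']}'")


agent_context: Dict[str, List[str]] = {}
changes = edit_feedback(
    "Buy our amazing product today", "Consider our product today"
)
remember(agent_context, "Copywriter", changes)
print(agent_context["Copywriter"])
```

A raw diff is only the starting point. In practice the notes would likely be summarized into a reusable lesson (for example, "use a less pushy tone") before being added to the agent's context.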

Looking Ahead

The era of static, "one-size-fits-all" AI tools is ending. We are moving towards Self-Evolving Agents that are generated on-demand and improve through collaboration.

At Paiteams, we are committed to integrating these methodologies, such as Automated Generation and Hybrid Policy Optimization, into our engineering stack. Our goal is simple: to provide you with an AI team that grows with you.

Reference: The insights discussed are inspired by the recent paper Youtu-Agent: Scaling Agent Productivity with Automated Generation and Hybrid Policy Optimization.